Server downtime costs money and customers: sales stop while systems are offline, and repeated outages damage your reputation. Optimizing your servers helps keep uptime and performance high. This article explores several server optimization techniques that minimize downtime and keep your business running smoothly.

Understanding Downtime and Its Impact on Business

Businesses constantly strive for efficiency and productivity, yet every company experiences downtime: periods when operations are interrupted or halted. Causes include technical failures, maintenance, security breaches, natural disasters, and human error. While downtime cannot be eliminated entirely, its impact on a business can be significant and far-reaching.

Financial Losses

One of the most immediate and tangible consequences of downtime is financial loss. When operations are disrupted, businesses may experience lost revenue, increased expenses, and decreased productivity. For example, an e-commerce website experiencing downtime could lose sales and potentially see customers turn to competitors. Additionally, the cost of resolving the issue and restoring operations can be substantial, further impacting the bottom line.

Customer Dissatisfaction

Downtime can significantly damage customer relationships. Customers expect seamless service and availability, and any disruptions can lead to frustration, dissatisfaction, and even loss of loyalty. For example, a bank experiencing an outage could see its customers unable to access their accounts, leading to frustration and potential loss of trust. It’s crucial for businesses to prioritize communication and transparency during downtime, keeping customers informed and providing updates on the situation.

Reputation Damage

Beyond immediate financial losses, downtime can also have long-term negative consequences for a company’s reputation. Frequent or lengthy outages can lead to a perception of unreliability and inefficiency. This can be especially detrimental to businesses that rely heavily on technology, such as software companies or online service providers. Maintaining a strong reputation is crucial for attracting and retaining customers, so minimizing downtime is essential.

Productivity and Efficiency

Downtime can also impact employee productivity and efficiency. When employees are unable to access critical systems or perform their tasks, it can lead to delays, frustration, and overall reduced output. Additionally, if downtime requires employees to work overtime or change their schedules to address the issue, it can lead to burnout and decreased morale.

Mitigation and Prevention

While downtime is unavoidable, businesses can take proactive measures to mitigate its impact and minimize its frequency. This includes implementing robust disaster recovery plans, investing in redundant systems, conducting regular maintenance, and providing employee training on cybersecurity and best practices. By taking these steps, businesses can build resilience and minimize the disruption caused by downtime.

Key Server Optimization Techniques

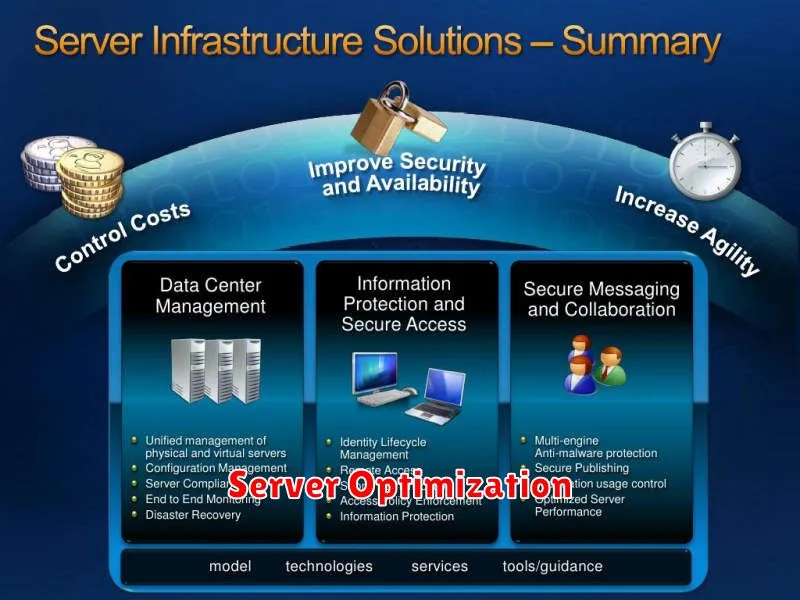

Server optimization is crucial for any website or application, as it directly impacts performance, user experience, and overall efficiency. The techniques in this section can significantly improve your server’s capabilities and help ensure smooth operation.

1. Hardware Optimization

The foundation of server optimization lies in choosing the right hardware. Consider the following factors:

- CPU: Opt for a CPU with multiple cores and high clock speed for efficient processing.

- RAM: Adequate RAM is essential for handling multiple requests and minimizing loading times.

- Storage: Select fast storage solutions like SSDs for quick data access and improved performance.

2. Software Optimization

Software plays a vital role in server optimization. Here are some key areas to focus on:

- Operating System: Choose a lightweight and efficient operating system that minimizes resource consumption.

- Web Server: Select a robust web server like Apache or Nginx, known for their performance and scalability.

- Database Optimization: Tune your database for optimal query performance by indexing tables and optimizing database settings.

3. Code Optimization

Clean and optimized code is essential for efficient server performance. Follow these practices:

- Caching: Store frequently accessed data in memory so that repeated requests skip the database, reducing query load and improving response times.

- Code Minification: Reduce file sizes by removing unnecessary characters from your code, resulting in faster loading times.

- Image Optimization: Optimize images for web use by reducing file sizes and using appropriate formats like WebP.
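
The caching item above can be illustrated with a minimal sketch. It assumes a single-process application and a hypothetical `load_product_from_db` function standing in for an expensive query; the class name `TTLCache` and the 60-second lifetime are illustrative choices, not a recommendation.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        """Return the cached value, calling loader() only on a miss or expiry."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader()
        self._store[key] = (now + self.ttl_seconds, value)
        return value


# Hypothetical stand-in for an expensive database query.
calls = {"count": 0}


def load_product_from_db() -> dict:
    calls["count"] += 1
    return {"id": 42, "name": "example"}


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_load("product:42", load_product_from_db)
second = cache.get_or_load("product:42", load_product_from_db)  # served from memory
```

Only the first call reaches the loader; the second is answered from memory until the entry expires. A multi-server deployment would more likely use a shared cache service, which this sketch does not cover.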

4. Network Optimization

Network optimization is crucial for smooth data transfer and fast response times:

- Network Bandwidth: Ensure you have sufficient bandwidth to handle traffic spikes and maintain consistent performance.

- Network Latency: Minimize network latency by choosing a reliable hosting provider and optimizing network settings.

- Load Balancing: Distribute traffic across multiple servers to prevent overloading and ensure consistent availability.

5. Security Optimization

Security is paramount for server stability and data protection. Implement these measures:

- Firewall: Install a robust firewall to block unauthorized access and prevent malicious attacks.

- Regular Security Updates: Keep your software and operating system updated to patch vulnerabilities and protect against exploits.

- Strong Passwords: Use strong and unique passwords for all user accounts and administrative access.
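
As a small illustration of the strong-password item, the sketch below generates random passwords with Python's standard `secrets` module. The function name `generate_password`, the default length of 20, and the minimum of 12 are assumptions made for this example.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from letters, digits, and punctuation."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice uses a cryptographically strong random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


password = generate_password()
```

In practice a password manager or secrets vault would generate and store these values; the point here is only that passwords should come from a cryptographic random source, not from `random`.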

6. Monitoring and Analysis

Constant monitoring and analysis are essential for identifying performance bottlenecks and optimizing server resources:

- Server Monitoring Tools: Utilize monitoring tools to track resource usage, performance metrics, and potential issues.

- Log Analysis: Analyze server logs to identify patterns, errors, and areas for improvement.

- Performance Testing: Conduct regular performance tests to evaluate response times, load capacity, and overall efficiency.
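
To make the log analysis item concrete, here is a rough sketch that counts HTTP status codes and finds which paths produce server errors. It assumes access logs in Common Log Format; the sample lines are invented for illustration and use documentation-only IP addresses.

```python
import re
from collections import Counter

# Matches the request, status, and size fields of a Common Log Format line.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)

# Illustrative sample lines, not real traffic.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '203.0.113.9 - - [10/Oct/2024:13:55:37 +0000] "GET /api/orders HTTP/1.1" 500 512',
    '198.51.100.7 - - [10/Oct/2024:13:55:38 +0000] "POST /api/orders HTTP/1.1" 201 128',
    '198.51.100.7 - - [10/Oct/2024:13:55:39 +0000] "GET /api/orders HTTP/1.1" 503 0',
]


def summarize(lines):
    """Count responses per status code and 5xx errors per path."""
    status_counts = Counter()
    error_paths = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match is None:
            continue  # skip lines that do not look like access-log entries
        status = int(match.group("status"))
        status_counts[status] += 1
        if status >= 500:
            error_paths[match.group("path")] += 1
    return status_counts, error_paths


status_counts, error_paths = summarize(SAMPLE_LOG)
server_errors = sum(n for code, n in status_counts.items() if code >= 500)
error_rate = server_errors / sum(status_counts.values())
```

For this sample, half of the requests return a 5xx status and all of them hit `/api/orders`, which is the kind of pattern that points to a specific endpoint worth investigating.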

By implementing these key server optimization techniques, you can significantly enhance your server’s performance, reliability, and security. Remember that optimization is an ongoing process, so continuous monitoring and adjustments are crucial for maintaining optimal performance and achieving your desired results.

Load Balancing and Failover Strategies

In the realm of modern computing, ensuring high availability, performance, and resilience is paramount. To achieve these goals, system architects often employ load balancing and failover strategies. These techniques distribute incoming traffic across multiple servers, maximizing resource utilization and minimizing downtime in the event of failures.

Load Balancing

Load balancing is a technique that distributes incoming network traffic across a group of servers, often called a server farm or server pool. This approach enhances performance by preventing any single server from becoming overloaded and improves availability because the remaining servers can keep serving traffic if one fails. The most common load balancing methods include:

- Round-robin: This method distributes requests to servers in a sequential order, cycling through them.

- Least connections: Requests are directed to the server with the fewest active connections.

- Weighted round-robin: This method assigns weights to servers, allowing for more powerful servers to handle more requests.

- IP hash: This technique uses the client’s IP address to determine the server that will handle the request.
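
The four methods above can be sketched in a few lines each. This is a simplified illustration, not how any particular load balancer implements them: the server names are hypothetical, and the weighted variant simply repeats servers in proportion to their weight, whereas production balancers usually interleave requests more smoothly.

```python
import hashlib
import itertools
from typing import Dict, Iterator, List

SERVERS = ["app-1", "app-2", "app-3"]  # hypothetical server names


def round_robin(servers: List[str]) -> Iterator[str]:
    """Cycle through servers in order, forever."""
    return itertools.cycle(servers)


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Repeat each server according to its weight, then cycle."""
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections."""
    return min(active, key=active.get)


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server so the same client lands on the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(SERVERS)
first_four = [next(rr) for _ in range(4)]  # app-1, app-2, app-3, app-1

wrr = weighted_round_robin({"big": 2, "small": 1})
first_six = [next(wrr) for _ in range(6)]  # big, big, small, big, big, small
```

Note that with this simple modulo-based IP hash, adding or removing a server remaps most clients; schemes such as consistent hashing reduce that churn.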

Failover

Failover strategies ensure that if one server fails, another server takes over its responsibilities with little or no interruption. This approach is crucial for mission-critical applications where downtime is unacceptable. Common failover techniques include:

- Active-passive: In this setup, one server is active, while the other is passive. The passive server takes over if the active server fails.

- Active-active: Both servers are active and handle requests simultaneously. If one server fails, the other takes over its load.

- Clustering: Servers are grouped together, and requests are distributed across them. If one server fails, the others take over its workload.
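
The active-passive pattern can be sketched as "send traffic to the first healthy server in priority order". The example below is a minimal sketch under stated assumptions: the server names and the in-memory `health` table are hypothetical, and a real health check would probe a TCP port or an HTTP endpoint.

```python
from typing import Callable, Dict, List, Optional


def choose_server(
    ordered_servers: List[str], is_healthy: Callable[[str], bool]
) -> Optional[str]:
    """Return the first healthy server in priority order (primary first), or None."""
    for server in ordered_servers:
        if is_healthy(server):
            return server
    return None


# Hypothetical health table standing in for real health probes.
health: Dict[str, bool] = {"primary": True, "standby": True}


def check(server: str) -> bool:
    return health.get(server, False)


before_failure = choose_server(["primary", "standby"], check)  # "primary"
health["primary"] = False  # simulate a crash of the active server
after_failure = choose_server(["primary", "standby"], check)   # "standby"
```

Real failover also has to deal with issues this sketch ignores, such as keeping the standby's data in sync and avoiding two servers both believing they are active.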

Benefits of Load Balancing and Failover

Implementing load balancing and failover strategies offers numerous benefits, including:

- Improved performance: By distributing traffic across multiple servers, load balancing reduces server load and improves overall system performance.

- Increased availability: Failover mechanisms ensure that even if one server fails, the application remains available, minimizing downtime.

- Enhanced scalability: Load balancing and failover enable seamless scaling of the system to accommodate increasing workloads without compromising performance.

- Cost efficiency: Utilizing existing server resources effectively reduces the need for additional hardware, leading to cost savings.
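
The availability benefit can be estimated with simple arithmetic. The sketch below assumes that server failures are independent and that any single healthy server can carry the full load; both are idealizations, so treat the results as an upper bound. The 99% figure is an illustrative input, not a measurement.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def combined_availability(single: float, replicas: int) -> float:
    """Availability of `replicas` independent servers where any one can serve traffic."""
    return 1 - (1 - single) ** replicas


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of downtime per year at a given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


# One server at 99% availability: about 5,256 minutes (roughly 3.65 days) per year.
one_server = downtime_minutes_per_year(0.99)

# Two such servers with failover: 99.99% combined, about 52.6 minutes per year.
two_servers = downtime_minutes_per_year(combined_availability(0.99, 2))
```

Correlated failures, such as a shared power supply, network link, or faulty deployment, make real-world figures worse than this calculation suggests.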

Conclusion

Load balancing and failover strategies are essential for building robust and scalable applications. These techniques ensure high availability, performance, and resilience, ultimately leading to improved user experience and business continuity.

Database Optimization for Performance

A well-optimized database is crucial for any application that relies on data storage and retrieval. It ensures fast response times, efficient resource utilization, and a smooth user experience. Database optimization involves a series of techniques and strategies aimed at enhancing performance, reducing resource consumption, and maximizing the effectiveness of your database system.

Understanding Database Performance

Database performance is measured by various factors, including query execution time, data retrieval speed, and the ability to handle concurrent requests. Slow database performance can manifest as sluggish application response times, unresponsive websites, and even system crashes. Optimizing your database is essential to prevent these issues and ensure optimal functionality.

Key Optimization Strategies

Several techniques can be implemented to optimize database performance, including:

- Indexing: Creating indexes on frequently accessed columns accelerates data retrieval by providing shortcuts for the database system.

- Query Optimization: Analyzing and improving SQL queries to reduce execution time and optimize resource usage is critical for performance.

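- Data Normalization: Organizing data into well-structured tables reduces redundancy and improves data integrity. Writes and updates become simpler and safer, although heavily normalized schemas can require more joins on read-heavy queries.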

- Caching: Storing frequently used data in memory (cache) allows for rapid access, minimizing database lookups and improving performance.

- Database Tuning: Configuring database parameters, such as buffer pool size and, where the engine provides one, query cache settings, can significantly impact performance.

- Hardware Optimization: Ensuring adequate hardware resources, including storage capacity, RAM, and CPU power, is crucial for optimal database performance.
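
The effect of indexing can be observed directly by asking the database for its query plan. The sketch below uses SQLite through Python's built-in `sqlite3` module because it needs no setup; the `orders` table, its columns, and the index name are hypothetical, and other database engines expose query plans through their own `EXPLAIN` variants.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

QUERY = "SELECT total FROM orders WHERE customer_id = ?"


def query_plan(connection: sqlite3.Connection) -> str:
    """Return SQLite's plan description for QUERY as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + QUERY, (7,)).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the plan detail text


plan_before = query_plan(conn)  # a full scan of the orders table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn)   # a search that uses idx_orders_customer
```

Printing `plan_before` and `plan_after` shows the plan change from a table scan to an index search. The exact wording varies between SQLite versions, so compare the plans by eye instead of matching the text exactly.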

Benefits of Database Optimization

Optimizing your database provides numerous benefits, including:

- Faster Response Times: Improved query performance results in faster data retrieval, leading to quicker application responses and enhanced user experience.

- Reduced Resource Consumption: Efficient queries and optimized data structures minimize resource usage, leading to cost savings and improved system stability.

- Increased Scalability: A well-optimized database can handle increased workloads and user traffic more effectively, ensuring smooth operation as your application grows.

- Enhanced Data Integrity: Proper normalization and data optimization contribute to a more robust and reliable database, reducing inconsistencies and errors.

Conclusion

Database optimization is an ongoing process that requires regular monitoring, analysis, and adjustments. By implementing the strategies outlined above, you can significantly improve the performance of your database, enhance application responsiveness, and ensure a smooth and efficient user experience.

Server Monitoring and Performance Tuning

Server monitoring and performance tuning are crucial aspects of ensuring the smooth and efficient operation of any system. By constantly monitoring server performance, system administrators can identify and address potential issues before they escalate into major problems. This proactive approach helps maintain uptime, prevent performance degradation, and optimize resource utilization.

Server monitoring involves collecting and analyzing data on various server metrics, such as CPU usage, memory consumption, disk space, network traffic, and application performance. This data provides valuable insights into the overall health and performance of the server.
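
As a minimal sketch of metric collection, the function below takes one snapshot of disk usage and CPU load using only the Python standard library. The function name and the returned keys are assumptions for this example. Memory and network counters usually need a platform-specific source or a third-party library, so they are left out here.

```python
import os
import shutil
import time
from typing import Dict, Optional


def collect_metrics(path: str = "/") -> Dict[str, Optional[float]]:
    """Take one snapshot of disk usage and, where available, CPU load."""
    disk = shutil.disk_usage(path)
    disk_used_percent = 100.0 * disk.used / disk.total if disk.total else 0.0

    # os.getloadavg exists on Unix-like systems only.
    load_1m: Optional[float] = None
    if hasattr(os, "getloadavg"):
        try:
            load_1m = os.getloadavg()[0]
        except OSError:
            load_1m = None

    return {
        "timestamp": time.time(),
        "disk_used_percent": disk_used_percent,
        "load_1m": load_1m,
        "cpu_count": float(os.cpu_count() or 1),
    }


snapshot = collect_metrics(os.getcwd())
```

A monitoring agent would call something like this on a schedule and ship the snapshots to a central store, where they can be graphed and compared over time.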

Performance tuning, on the other hand, focuses on optimizing server configurations and resources to enhance performance. This involves identifying bottlenecks, analyzing resource consumption, and implementing adjustments to improve responsiveness and efficiency.

Key Benefits of Server Monitoring and Performance Tuning

The benefits of implementing effective server monitoring and performance tuning strategies are numerous:

- Improved Uptime and Reliability: Early detection and resolution of issues minimize downtime and ensure continuous service availability.

- Enhanced Performance: Optimized resource allocation and configuration lead to faster response times and improved application performance.

- Reduced Costs: By preventing performance degradation and unnecessary resource consumption, server monitoring and tuning can help lower operational expenses.

- Improved Security: Monitoring security metrics and identifying suspicious activity can help prevent breaches and protect sensitive data.

- Data-Driven Decision-Making: Real-time performance data provides valuable insights for making informed decisions about system upgrades, resource allocation, and capacity planning.

Tools and Techniques

A wide range of tools and techniques are available for server monitoring and performance tuning, including:

- Monitoring Software: Tools like Nagios, Zabbix, and Prometheus collect and analyze server data, providing alerts and reporting capabilities.

- Performance Profilers: Applications like JProfiler and VTune help identify performance bottlenecks within specific software applications.

- Log Analysis Tools: Log files provide valuable information about system events and errors, which can be analyzed using tools like Splunk and Elasticsearch.

- System Tuning: Configuring operating system parameters, such as kernel settings and memory management, can optimize performance for specific workloads.

- Application Optimization: Fine-tuning application code, database queries, and caching mechanisms can significantly improve application performance.

Best Practices

To effectively implement server monitoring and performance tuning, follow these best practices:

- Establish Clear Monitoring Objectives: Define the key performance indicators (KPIs) that are most relevant to your system and business goals.

- Implement Automated Monitoring: Leverage software tools to collect and analyze data continuously without manual intervention.

- Set Up Alerts and Notifications: Configure alerts to notify administrators of critical events and potential issues.

- Analyze Performance Trends: Identify patterns and anomalies in performance data to predict and prevent problems.

- Regularly Review and Tune: Continuously evaluate performance and make adjustments to system configurations and resources as needed.
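
Two of the practices above, alerting and trend analysis, can be sketched as follows. The thresholds, metric names, and the three-standard-deviation rule are hypothetical starting points chosen for illustration; real values should come from your own KPIs and historical data.

```python
from statistics import mean, pstdev
from typing import Dict, List

# Hypothetical thresholds; tune them to your own KPIs.
THRESHOLDS: Dict[str, float] = {"cpu_percent": 90.0, "disk_used_percent": 85.0}


def threshold_alerts(metrics: Dict[str, float]) -> List[str]:
    """Return one message per metric that exceeds its configured threshold."""
    return [
        f"{name} is {metrics[name]:.1f}, above limit {limit:.1f}"
        for name, limit in THRESHOLDS.items()
        if metrics.get(name, 0.0) > limit
    ]


def is_anomaly(history: List[float], latest: float, z_limit: float = 3.0) -> bool:
    """Flag a reading more than z_limit standard deviations from the recent mean."""
    if len(history) < 2:
        return False  # not enough data to judge
    spread = pstdev(history)
    if spread == 0:
        return latest != history[0]
    return abs(latest - mean(history)) / spread > z_limit


alerts = threshold_alerts({"cpu_percent": 97.2, "disk_used_percent": 40.0})
spike = is_anomaly([120, 118, 125, 122, 119], latest=480)  # response times in ms
```

Here `alerts` contains a single message about CPU usage, and `spike` is `True` because 480 ms is far outside the recent range. Fixed thresholds catch hard limits, while the deviation check catches unusual behavior that stays below those limits.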

By consistently monitoring and tuning your servers, you can ensure their optimal performance, minimize downtime, and achieve your business objectives.

Implementing a Content Delivery Network (CDN)

A Content Delivery Network (CDN) is a geographically distributed network of servers that deliver web content to users based on their location. This helps to improve website performance and user experience by reducing latency and improving website speed.

CDNs work by caching static content, such as images, CSS files, and JavaScript files, on servers located closer to users. When a user requests a page, the CDN serves that content from the nearest server that has it cached, reducing the distance the data must travel. This results in faster loading times and improved user experience.
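
The caching behavior described above can be simulated in a few lines. This is a toy model under stated assumptions: the edge locations, latencies, and `origin` function are hypothetical, and real CDNs also honor cache headers, expiry times, and purge requests, all of which are omitted here.

```python
from typing import Callable, Dict, Tuple


class EdgeCache:
    """One CDN edge location that caches responses fetched from the origin."""

    def __init__(self, name: str, fetch_from_origin: Callable[[str], bytes]):
        self.name = name
        self.fetch_from_origin = fetch_from_origin
        self._cache: Dict[str, bytes] = {}

    def get(self, path: str) -> Tuple[bytes, str]:
        """Return (body, "HIT" or "MISS") for a requested path."""
        if path in self._cache:
            return self._cache[path], "HIT"
        body = self.fetch_from_origin(path)  # slow trip back to the origin
        self._cache[path] = body
        return body, "MISS"


origin_requests = []


def origin(path: str) -> bytes:
    """Hypothetical origin server; records each request it receives."""
    origin_requests.append(path)
    return f"contents of {path}".encode("utf-8")


# Hypothetical edge locations and client-to-edge latencies in milliseconds.
edges = {
    "frankfurt": EdgeCache("frankfurt", origin),
    "singapore": EdgeCache("singapore", origin),
}
latency_ms = {"frankfurt": 12, "singapore": 180}

nearest = min(latency_ms, key=latency_ms.get)
_, first_status = edges[nearest].get("/static/site.css")   # MISS: fetched from origin
_, second_status = edges[nearest].get("/static/site.css")  # HIT: served from the edge
```

The origin receives only one request for the file, which is also why a CDN reduces origin bandwidth: later requests in the same region never leave the edge.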

Benefits of Using a CDN

There are many benefits to using a CDN, including:

- Improved website performance: CDNs reduce latency and improve website speed, resulting in a better user experience.

- Increased website availability: CDNs can help to improve website availability by distributing website traffic across multiple servers. This means that if one server goes down, the website will still be accessible.

- Reduced bandwidth costs: CDNs can help to reduce bandwidth costs by caching content closer to users. This means that less data needs to be transmitted from the origin server.

- Enhanced security: CDNs can help to enhance security by providing features such as DDoS protection and TLS (SSL) encryption.

How to Implement a CDN

Implementing a CDN is a relatively straightforward process. The first step is to choose a CDN provider. There are many reputable CDN providers available, such as Cloudflare, Akamai, and Amazon CloudFront.

Once you have chosen a provider, you will need to sign up for an account and configure your CDN settings. This typically involves adding your website’s domain name to the CDN and configuring the CDN’s cache settings. Some CDN providers offer a free tier, while others charge a fee based on bandwidth usage.

After you have configured your CDN settings, you can begin using it to deliver your website’s content. The CDN will automatically start caching your static content and delivering it to users based on their location.

Conclusion

Implementing a CDN is a great way to improve website performance, availability, and security. It is a relatively easy process that can be completed in a few steps. By using a CDN, you can provide your users with a better experience and improve your website’s overall performance.

Best Practices for Server Maintenance

Server maintenance is crucial for ensuring the smooth operation and optimal performance of your IT infrastructure. By implementing best practices, you can minimize downtime, enhance security, and optimize resource utilization. Here are some key strategies to consider:

Regular Monitoring and Logging

Regularly monitoring your servers is essential for identifying potential issues early on. Implement monitoring tools that track key metrics such as CPU usage, disk space, memory consumption, and network traffic. Enable comprehensive logging to capture system events, errors, and security incidents. This data provides valuable insights for troubleshooting and identifying patterns that could indicate problems.

Regular Updates and Patching

Software vulnerabilities are a constant threat, making regular updates and patching critical. Stay up-to-date on security advisories and promptly apply patches for operating systems, applications, and firmware. Prioritize patching critical vulnerabilities and conduct thorough testing before deploying updates to production environments.

Backup and Disaster Recovery

Having a robust backup and disaster recovery plan is essential for minimizing data loss in the event of a server failure or other unforeseen incident. Implement regular backups of critical data and system configurations. Test your recovery procedures periodically to ensure they are effective and up-to-date.
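
A backup is only useful if the copy is intact, so a backup step is often paired with a verification step. The sketch below copies a file and compares SHA-256 checksums of the source and the copy; the file name `app.conf` and the `backups` directory are hypothetical, and the demonstration runs entirely inside a temporary directory.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir and confirm the copy matches byte for byte."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also preserves timestamps where possible
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"backup of {source} failed verification")
    return target


# Demonstration with throwaway files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    config = Path(tmp) / "app.conf"  # hypothetical file to protect
    config.write_text("max_connections = 200\n")
    copy = backup_and_verify(config, Path(tmp) / "backups")
    restored_text = copy.read_text()
```

A production backup would also go to separate storage, ideally off-site, and a periodic restore test remains the only real proof that recovery works.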

Performance Optimization

Optimize server performance by identifying and addressing bottlenecks. Use performance analysis tools to identify areas for improvement, such as inefficient code, excessive resource usage, or network congestion. Consider upgrading hardware, optimizing database queries, or implementing caching mechanisms to enhance server responsiveness.

Security Best Practices

Implement strong security measures to protect your servers from unauthorized access and malicious attacks. Use strong passwords, enable multi-factor authentication, regularly review user permissions, and install firewalls and intrusion detection systems. Stay informed about emerging security threats and adapt your security posture accordingly.

Documentation and Training

Maintain comprehensive documentation of your server infrastructure, including configuration settings, security policies, and troubleshooting procedures. Provide training to IT staff on server maintenance procedures, security best practices, and disaster recovery plans. Well-documented processes and trained personnel ensure a smooth and efficient operation.

Conclusion

By implementing these best practices, you can significantly enhance server reliability, security, and performance. Proactive maintenance reduces the risk of downtime, minimizes data loss, and optimizes resource utilization, ensuring your IT infrastructure operates smoothly and meets your business needs.

Case Studies: Successful Downtime Reduction

Downtime is a major concern for any business, especially those that rely on production or service delivery. Even a few minutes of downtime can have a significant impact on productivity, revenue, and customer satisfaction. Therefore, it is crucial for businesses to prioritize downtime reduction and implement effective strategies to minimize its occurrence.

This section presents several case studies of businesses that have successfully reduced downtime and improved their overall operational efficiency. These examples demonstrate the power of proactive planning, robust technology, and a commitment to continuous improvement.

Case Study 1: Manufacturing Company

A large manufacturing company faced frequent production line stoppages due to equipment failures. The company implemented a predictive maintenance program using IoT sensors and data analytics. The sensors monitored key equipment parameters in real-time, allowing for early detection of potential issues. This enabled the company to schedule maintenance proactively, preventing breakdowns and reducing downtime by 40%.

Case Study 2: Online Retailer

An online retailer experienced frequent website crashes during peak shopping seasons. The company implemented a cloud-based infrastructure with automated failover mechanisms. This ensured that traffic was automatically redirected to backup servers in case of an outage. The new infrastructure significantly improved website reliability, reducing downtime by 90% during peak periods.

Case Study 3: Healthcare Provider

A healthcare provider faced challenges in managing patient data due to outdated systems. The provider implemented a cloud-based electronic health record (EHR) system that offered improved data security and accessibility. The new system streamlined data management processes and reduced downtime associated with system failures, resulting in a 75% improvement in patient care efficiency.

Key Takeaways

These case studies demonstrate the importance of proactive downtime reduction strategies. By implementing effective technologies, adopting a data-driven approach, and focusing on continuous improvement, businesses can significantly minimize downtime and improve their overall operational performance. Here are some key takeaways:

- Invest in robust technologies: Utilize IoT sensors, cloud infrastructure, and advanced analytics to monitor equipment performance and predict potential issues.

- Embrace a data-driven approach: Analyze downtime data to identify root causes and implement targeted solutions.

- Foster a culture of continuous improvement: Regularly review downtime reduction strategies and adapt them based on evolving needs and technological advancements.

By learning from these successful case studies, businesses can gain valuable insights into the best practices for effectively reducing downtime and ensuring business continuity. The benefits of proactive downtime management are undeniable, leading to improved productivity, increased revenue, and enhanced customer satisfaction.

Choosing the Right Server Optimization Tools

Server optimization is a crucial aspect of ensuring optimal website performance. With a plethora of tools available, choosing the right ones can be a daunting task. This section walks through the key considerations and some popular options.

Firstly, define your goals. What are you trying to achieve? Do you want to reduce page load times, improve resource utilization, enhance security, or all of the above? Your specific needs will determine the type of tools you require.

Next, consider your technical expertise. Some tools are highly technical and require advanced knowledge, while others are user-friendly and accessible to beginners. Choose tools that align with your technical capabilities.

Budget is another critical factor. Server optimization tools vary in price, ranging from free to premium subscriptions. Determine how much you are willing to spend and select tools that fit your budget.

Now, let’s explore some popular server optimization tools:

Performance Monitoring Tools

Performance monitoring tools provide insights into server performance metrics, helping you identify bottlenecks and areas for improvement. Some popular options include:

- New Relic

- Datadog

- Pingdom

Caching Tools

Caching tools store copies of frequently accessed content, reducing server load and improving page load times. Some popular choices include:

- W3 Total Cache (WordPress plugin)

- WP Super Cache (WordPress plugin)

- Cloudflare

Security Tools

Security tools protect your server from vulnerabilities and malicious attacks. Some commonly used options include:

- Sucuri

- Wordfence (WordPress plugin)

- Cloudflare

Other Tools

Beyond performance, caching, and security, there are other tools that can enhance server optimization. These include:

- Load balancers

- Content delivery networks (CDNs)

- Server management panels

Choosing the right server optimization tools is crucial for ensuring a smooth and efficient website experience. By carefully considering your needs, technical expertise, budget, and the available options, you can select the tools that will optimize your server and enhance your website’s performance.

Future Trends in Server Optimization

Server optimization is a crucial aspect of ensuring smooth and efficient operation for any website or application. As technology continues to evolve rapidly, server optimization techniques are also constantly being refined and new trends are emerging.

One of the most significant trends is the rise of serverless computing. Serverless architectures allow developers to focus on writing code without having to manage the underlying infrastructure. The cloud provider takes over most server provisioning and management, which can improve efficiency and, for suitable workloads, reduce costs.

Another key trend is the increasing adoption of cloud-native technologies. Cloud-native applications are designed and built specifically for cloud environments, enabling scalability, resilience, and agility. This shift towards cloud-native architectures will further drive the need for optimized server configurations and efficient resource utilization.

Artificial Intelligence (AI) is also playing a transformative role in server optimization. AI-powered tools can analyze server performance data and identify bottlenecks, allowing for proactive optimization and improved efficiency. AI can also be used to automate server management tasks, reducing human intervention and increasing reliability.

Edge computing is another emerging trend that will significantly impact server optimization. Edge computing brings computation and data storage closer to users, reducing latency and improving performance. This trend will require optimized server configurations and efficient resource allocation at the edge.

In conclusion, server optimization is an ever-evolving field with numerous trends shaping its future. Serverless computing, cloud-native technologies, AI, and edge computing are all key drivers of innovation, leading to more efficient and scalable server infrastructure.